In a world increasingly driven by multimodal interactions, the ability of machines to understand human non-verbal communication is no longer just a novelty; it’s a necessity. From touchless interfaces in sterile environments to immersive gesture-based commands in AR/VR and a more nuanced understanding of human intent, hand gesture recognition (HGR) is becoming a crucial element in creating truly seamless, human-centric digital experiences.

Traditionally, HGR relied heavily on classical computer vision and on deep learning models trained specifically on visual data. A new paradigm is now taking shape: using Large Language Models (LLMs) not only to process and reason about gestures but also to fuse them with other inputs such as speech, context, and structured data. This shift turns gestures from mere commands into a rich layer of communication, enabling machines to understand us on a deeper, more intuitive level.

This blog explores how the intersection of hand gesture recognition and multimodal LLMs is changing the way machines understand non-verbal communication. We’ll look at how recent Vision-Language Models (VLMs) such as Google’s Gemini, CLIP, Flamingo, and GPT-4V can interpret and classify hand gestures, even with minimal supervision or fine-tuning, and how those interpretations can be integrated into a holistic understanding of user intent. Whether you’re a researcher, developer, or enthusiast, this journey through the fusion of computer vision and natural language processing will show you how multimodal LLMs are shaping the future of intuitive human-computer interaction.

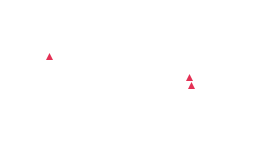

The Evolution of Human-Computer Interaction: From Touch to Intuition

Human-computer interaction has come a long way, constantly striving for more natural and efficient communication. Gesture recognition plays a pivotal role in this evolution, moving beyond simple button presses to truly intuitive interfaces.

- Glove-Based Interfaces: The Dawn of Direct Manipulation. The journey began with data gloves, employing sensors to detect finger movements and hand positions. While groundbreaking, these systems were often expensive, cumbersome, and restrictive, limiting widespread adoption. They offered direct manipulation but lacked the seamlessness desired for everyday use.

- Vision-Based 2D Recognition: Breaking Free from Tethers. To overcome the limitations of gloves, researchers moved toward camera-based hand tracking. Vision-based systems using standard webcams captured hand gestures in real time, and techniques such as grayscale conversion, edge detection, and optical flow let users interact without wearing any hardware. However, background clutter, inconsistent lighting, and limited depth perception often hindered performance.

- 3D Recognition with Depth Sensors: Adding a New Dimension. The next significant leap came with 3D hand gesture recognition using range (depth) cameras (e.g., Microsoft Kinect, Intel RealSense). These cameras capture real-time 3D spatial data, enabling precise tracking of hand movements and poses in three dimensions. This made gesture recognition more robust, opening doors for applications in gaming, simulation, and touchless control in sterile environments. Although these systems were powerful, processing speed and lighting sensitivity remained areas for improvement.

- The Multimodal Leap: Beyond Pure Vision. The true revolution arrives with the integration of advanced AI, particularly multimodal LLMs. This new era moves beyond simply recognizing a gesture to understanding what that gesture means in context, alongside other cues like speech, gaze, and environmental data. This is where HGR transcends simple input and becomes a vital component of genuinely intelligent interfaces.

From Scribbles to Solutions: How AI Understands What You Mean

At its core, the “math solver” prototype described in this post is a prime example of multimodal interaction. It doesn’t merely recognize a hand movement: it interprets that movement as a form of writing (visual input), understands the mathematical language within that visual content, and finally uses a language model to reason about and explain the solution in natural language. This synergy is what makes the technology so compelling.

This kind of intelligence goes beyond simple pattern recognition. It blends visual understanding with language comprehension, enabling the system to extract meaning from what it sees and then communicate that meaning in a way we can understand.

In effect, we are sending two very different types of input to the same AI system. First, it receives a visual cue, whether that’s a handwritten equation, a sketched diagram, or a symbol finger-drawn in the air. It then processes that visual cue either together with a linguistic task (a text prompt) or as a step before it, turning the visual content into structured knowledge that the language model can reason about.

Let’s break down how this works—and why it offers a glimpse into the future of human-computer interaction.

Method 1: Gesture as Language

- Real-Time Multimodal Input Fusion: A live video feed is captured (e.g., via OpenCV). Crucially, the system isn’t just seeing a hand; it interprets the user’s intent from the combination of gestures and the evolving visual “canvas.” Each frame is flipped horizontally so that on-screen movement mirrors the user’s hand, which makes writing feel natural: a small but significant detail for intuitive interaction.

- Contextual Hand Tracking and Gesture Interpretation: A custom HandDetector identifies hand landmarks, but the intelligence lies in interpreting them in context: the combination of raised fingers determines the command.

  - Index finger only: Signifies an intent to “draw” or “write.” This is akin to a spoken command to “start dictating.”

  - All fingers up: A “clear” command, analogous to saying “erase everything.”

  - Four fingers up (all except the pinky): The “send” gesture. This isn’t just a physical action; it’s a non-verbal “submit” command, telling the system to process the accumulated visual information (the drawing).

- Dynamic Visual Canvas and Intent Capture: While the index-finger-only gesture is active, the fingertip position is tracked to simulate drawing on a virtual canvas. This isn’t just about rendering pixels; it’s about capturing the user’s evolving “thought” or “expression” in visual form. The system connects the current and previous fingertip positions, building a visual record of the user’s input.
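The gesture-to-command mapping and the stroke logic described above can be sketched in a few lines of Python. This is a minimal illustration, not the project’s code: it assumes the hand detector reports finger states as five 0/1 flags in thumb-to-pinky order and gives the index fingertip in the already-mirrored frame (for example, after `cv2.flip(frame, 1)`). The names `GESTURES`, `classify`, and `Canvas` are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Sequence, Tuple

Point = Tuple[int, int]

# Hypothetical gesture table. Finger states are assumed to be five 0/1 flags
# in [thumb, index, middle, ring, pinky] order, as a landmark-based detector might report.
GESTURES = {
    (0, 1, 0, 0, 0): "draw",   # index finger only
    (1, 1, 1, 1, 1): "clear",  # all fingers up
    (1, 1, 1, 1, 0): "send",   # four fingers up, pinky down
}


def classify(fingers: Sequence[int]) -> Optional[str]:
    """Return the command for a finger-state vector, or None for unrecognized poses."""
    return GESTURES.get(tuple(fingers))


@dataclass
class Canvas:
    """Virtual canvas that stores strokes as segments between successive fingertip positions."""

    segments: List[Tuple[Point, Point]] = field(default_factory=list)
    prev: Optional[Point] = None

    def update(self, fingers: Sequence[int], tip: Point) -> Optional[str]:
        """Process one frame; `tip` is the index fingertip in the (already mirrored) frame."""
        command = classify(fingers)
        if command == "draw":
            if self.prev is not None:
                # Connect the previous fingertip position to the current one.
                self.segments.append((self.prev, tip))
            self.prev = tip
        else:
            # Any other pose lifts the "pen", so the next stroke starts fresh.
            self.prev = None
            if command == "clear":
                self.segments.clear()
        return command


canvas = Canvas()
canvas.update([0, 1, 0, 0, 0], (10, 10))  # pen down: first point, nothing drawn yet
canvas.update([0, 1, 0, 0, 0], (12, 14))  # adds the segment (10, 10) -> (12, 14)
command = canvas.update([1, 1, 1, 1, 0], (12, 14))  # four fingers up: "send"
```

Keeping the gesture table separate from the canvas makes it easy to remap poses without touching the drawing logic. In a live loop, `update` would be called once per frame, and a `"send"` result would trigger capturing the canvas as an image.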

Method 2: Whiteboard as a Digital Canvas

- Direct Visual Input: As an alternative to gestures, the user can directly write their mathematical problem on a digital whiteboard created with HTML and CSS. This method offers a more traditional yet equally powerful way to provide visual input.

- Explicit User Intent: Instead of a physical gesture, the user signals their intent to proceed by clicking an “Evaluate” button. This action serves the same purpose as the “send” gesture: it tells the system that the visual input is complete and ready for processing.

- Capturing the Digital Ink: When the “Evaluate” button is clicked, the content on the HTML canvas is captured as an image. This image of the handwritten problem becomes the visual data payload, just like the final frame from the gesture-based drawing.
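On the backend, the captured canvas often arrives as a data URL, since the browser’s `canvas.toDataURL()` returns a string of the form `data:image/png;base64,...` by default. The sketch below assumes the frontend posts that string unchanged; the function name `decode_canvas_data_url` is hypothetical. It decodes the payload into raw PNG bytes and rejects anything that is not a base64-encoded PNG.

```python
import base64
import binascii

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def decode_canvas_data_url(data_url: str) -> bytes:
    """Turn a 'data:image/png;base64,...' string into raw PNG bytes, or raise ValueError."""
    header, sep, payload = data_url.partition(",")
    if not sep or not header.startswith("data:image/png") or ";base64" not in header:
        raise ValueError("expected a base64-encoded PNG data URL")
    try:
        # validate=True rejects characters outside the base64 alphabet instead of skipping them.
        image_bytes = base64.b64decode(payload, validate=True)
    except binascii.Error as exc:
        raise ValueError("invalid base64 payload") from exc
    if not image_bytes.startswith(PNG_SIGNATURE):
        raise ValueError("payload is not a PNG image")
    return image_bytes


# Example: a tiny fake payload that carries only the PNG signature plus a few bytes.
sample = "data:image/png;base64," + base64.b64encode(PNG_SIGNATURE + b"demo").decode("ascii")
image_bytes = decode_canvas_data_url(sample)
```

Checking the PNG signature is a cheap sanity check; it does not prove that the rest of the file is a valid image.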

AI-Powered Multimodal Reasoning with LLMs (e.g., Gemini AI)

This is where the two methods converge. Whether the image comes from a gesture-drawn canvas or a digital whiteboard, it is encoded and passed to a generative multimodal model such as Google’s Gemini. The crucial element here is prompt engineering: the prompt “Explain the solution to this math problem in detail.” instructs the model to treat the visual input as a math problem and to generate a textual explanation. This demonstrates the model’s ability to:

- Parse visually: Recognize characters and symbols in the handwritten image, regardless of the input method.

- Reason mathematically: Understand the logic of the equation.

- Generate natural language: Translate complex mathematical steps into clear, human-readable text.
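A request like the one described above can be sketched with Google’s `google-generativeai` Python SDK, which accepts a list mixing inline image data and text. This is an assumption about the client library, not necessarily what the project uses: the model name `gemini-1.5-flash` is a placeholder (available models change over time), Google’s newer `google-genai` package exposes a different client interface, and `build_request` and `solve_image` are hypothetical helpers. Only `solve_image` needs the SDK, network access, and an API key.

```python
PROMPT = "Explain the solution to this math problem in detail."


def build_request(image_bytes: bytes, mime_type: str = "image/png") -> list:
    """Return the multimodal content list: an inline image part followed by the text prompt."""
    return [{"mime_type": mime_type, "data": image_bytes}, PROMPT]


def solve_image(image_bytes: bytes, api_key: str, model_name: str = "gemini-1.5-flash") -> str:
    """Send the image and prompt to Gemini and return the model's text answer."""
    # Imported lazily so build_request can be used (and tested) without the SDK installed.
    import google.generativeai as genai

    genai.configure(api_key=api_key)
    model = genai.GenerativeModel(model_name)
    response = model.generate_content(build_request(image_bytes))
    return response.text
```

Keeping request construction separate from the network call means the same content list works for images from either input method, and the prompt can be tuned in one place.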

Intelligent Response Parsing and Rich Feedback

The backend splits Gemini’s textual response into a “question” (the interpreted math problem) and a “step-by-step explanation.” This content is then cleaned, formatted with HTML for readability, and sent back to the frontend. This complete feedback loop, which pairs the user’s drawing with a detailed textual analysis, creates a highly engaging and educational experience.
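The exact splitting rule isn’t described, so the sketch below assumes a simple heuristic: the first paragraph of the response is treated as the interpreted question, and everything after the first blank line as the explanation. Markdown bold markers and heading hashes are stripped, the text is HTML-escaped so characters such as `<` in an inequality render safely, and each paragraph is wrapped in `<p>` tags. The function names are hypothetical.

```python
import html
import re
from typing import Dict, Tuple

BLANK_LINE = re.compile(r"\n\s*\n")


def split_response(text: str) -> Tuple[str, str]:
    """Split on the first blank line: question first, explanation after (assumed heuristic)."""
    parts = BLANK_LINE.split(text.strip(), maxsplit=1)
    question = parts[0].strip()
    explanation = parts[1].strip() if len(parts) > 1 else ""
    return question, explanation


def clean(fragment: str) -> str:
    """Remove Markdown bold markers and leading '#' heading markers."""
    fragment = fragment.replace("**", "")
    return re.sub(r"^#+\s*", "", fragment, flags=re.MULTILINE).strip()


def paragraph_html(paragraph: str) -> str:
    """Escape one paragraph and keep its internal line breaks as <br>."""
    escaped = html.escape(clean(paragraph))
    return "<p>" + escaped.replace("\n", "<br>") + "</p>"


def format_response(text: str) -> Dict[str, str]:
    """Return HTML fragments for the question and the step-by-step explanation."""
    question, explanation = split_response(text)
    paragraphs = [p for p in BLANK_LINE.split(explanation) if p.strip()]
    return {
        "question": "<h3>" + html.escape(clean(question)) + "</h3>",
        "explanation": "".join(paragraph_html(p) for p in paragraphs),
    }


reply = "**Problem:** 2 + 3 * 4\n\nStep 1: 3 * 4 = 12.\nStep 2: 2 + 12 = 14.\n\nSo x < 15."
result = format_response(reply)
```

Stripping `**` is deliberately crude (it would also mangle a Python-style exponent such as `2 ** 3`); a fuller implementation might render Markdown or LaTeX instead.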

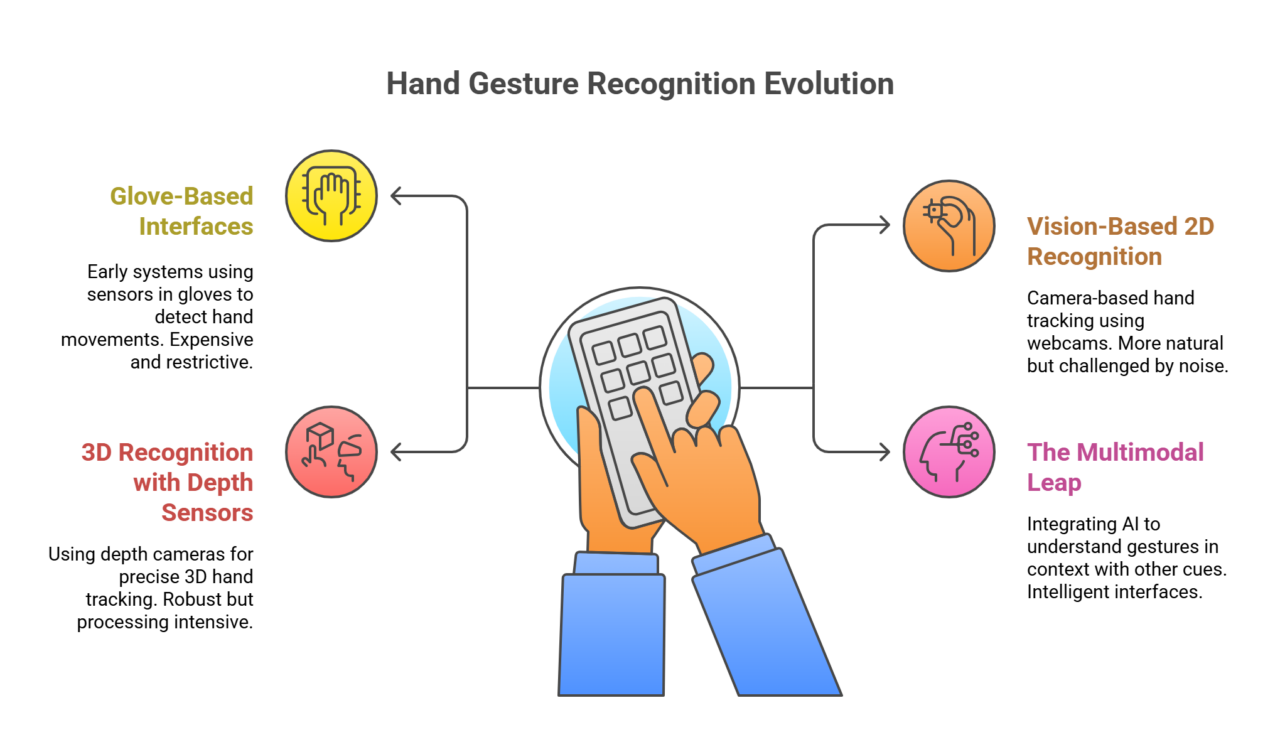

Beyond the Math Solver: A Glimpse into the Future of Interaction

While the math solver is a compelling demonstration, the true power of this multimodal approach lies in its broader applicability. It’s a foundation for a new generation of interfaces that understand us more naturally.

- Enabling Truly Natural User Interfaces (NUIs): The ability to combine gestures with voice, gaze, and even affective cues (e.g., emotional states inferred from facial expressions) allows for interfaces that are truly intuitive, reducing cognitive load and making technology accessible to a wider range of users, including those with disabilities.

- Enhanced Productivity and Creative Expression: Imagine gesturing to manipulate 3D models in CAD software, using “air-writing” or a quick sketch on a digital tablet that is instantly converted to text and summarized by an LLM, or using subtle hand movements to control music production software. The fusion of gesture and LLMs unlocks new avenues for creative and professional workflows.

- Immersive AR/VR Experiences: In augmented and virtual reality, natural gesture interaction is paramount. Multimodal LLMs can interpret complex sequences of gestures within these environments, allowing for highly immersive and responsive control of virtual objects, characters, and environments. This could mean “drawing” a virtual object into existence and then verbally instructing an AI to refine its design.

- Accessible and Inclusive Technology: This approach holds immense promise for assistive technologies. Consider users with motor impairments who could use subtle gestures, or even eye movements interpreted by an LLM, to control devices and communicate. Using text-to-speech to read out AI-generated solutions also creates more inclusive learning experiences for people with visual impairments or reading difficulties.

- Adaptive and Context-Aware AI: Future systems leveraging multimodal LLMs will be able to adapt to user preferences and context in real-time. A simple gesture might mean one thing in a gaming environment and something entirely different in a professional setting, with the LLM understanding the nuances based on the active application and user history.

- Beyond Screen-Based Interaction: The math solver’s “drawing in the air” mode and the digital whiteboard highlight a critical future trend: reducing reliance on single, rigid input methods like keyboards. This technology paves the way for seamless interaction with ambient intelligence, smart environments, and wearable devices. Imagine controlling your smart home with a simple, universally understood hand gesture, or interacting with public displays without physical touch.

Conclusion: The Dawn of an Intuitive Digital Era

This project demonstrates a powerful fusion of flexible input methods (from gesture recognition to direct digital drawing) and AI-powered problem solving. It offers new, intuitive ways to interact with educational content and, more broadly, with digital systems. By using hand gestures or familiar drawing interfaces, users can express ideas non-verbally, while multimodal LLMs like Gemini analyze these inputs and deliver detailed, context-aware responses in real time.

Such a system not only showcases the possibilities of human-AI collaboration but also holds great promise in enhancing accessibility, engagement, and learning experiences across various domains. As gesture-based computing, multimodal AI, and generative AI continue to evolve, this prototype serves as a meaningful step toward more natural, intelligent, and truly human-centric interfaces that bridge the gap between our physical actions and the digital world. The future of interaction isn’t just about what we can type or touch; it’s about what we can express, and how intelligently machines can understand and respond.

About the Authors

Aravind Narayanan is a Software Engineer with over 4 years of experience in software development, specializing in Python backend development. He is actively building expertise in DevOps and MLOps, focusing on streamlining processes and optimizing development operations. Aravind is also experienced in C++ and Windows application development. With a versatile skill set, he is excited to explore the latest technologies in MLOps and DevOps and is always seeking innovative ways to enhance and transform development practices.

Akhil K A is a Machine Learning Engineer who has spent over 3 years at FoundingMinds focusing on AI and ML platform development. His expertise spans vision-based machine learning and Natural Language Processing. Using frameworks like TensorFlow and PyTorch, Akhil builds advanced models and actively participates in ML competitions to stay at the forefront of artificial intelligence. His work combines technical prowess with a commitment to pushing the boundaries of AI technology.

Kalesh, a graduate engineer, has been working as an Associate Software Engineer at FoundingMinds for the past three years. With a keen interest in data-related domains, he enjoys handling data for AI/ML projects. He has strong foundational knowledge of Python and SQL and is currently expanding his expertise in data analytics and engineering tools. Outside work, he enjoys cooking new dishes, exploring different forms of art, and spending time with friends and family.